In SEO, where every directive shapes how search engines behave, robots.txt is a key tool that lets website owners guide web crawlers and control which parts of their site get crawled. In this guide, we’ll demystify robots.txt: what it is, how it works, and how it affects your website’s performance in search.

Understanding Robots.txt: The Gatekeeper of Web Crawling

What is Robots.txt?

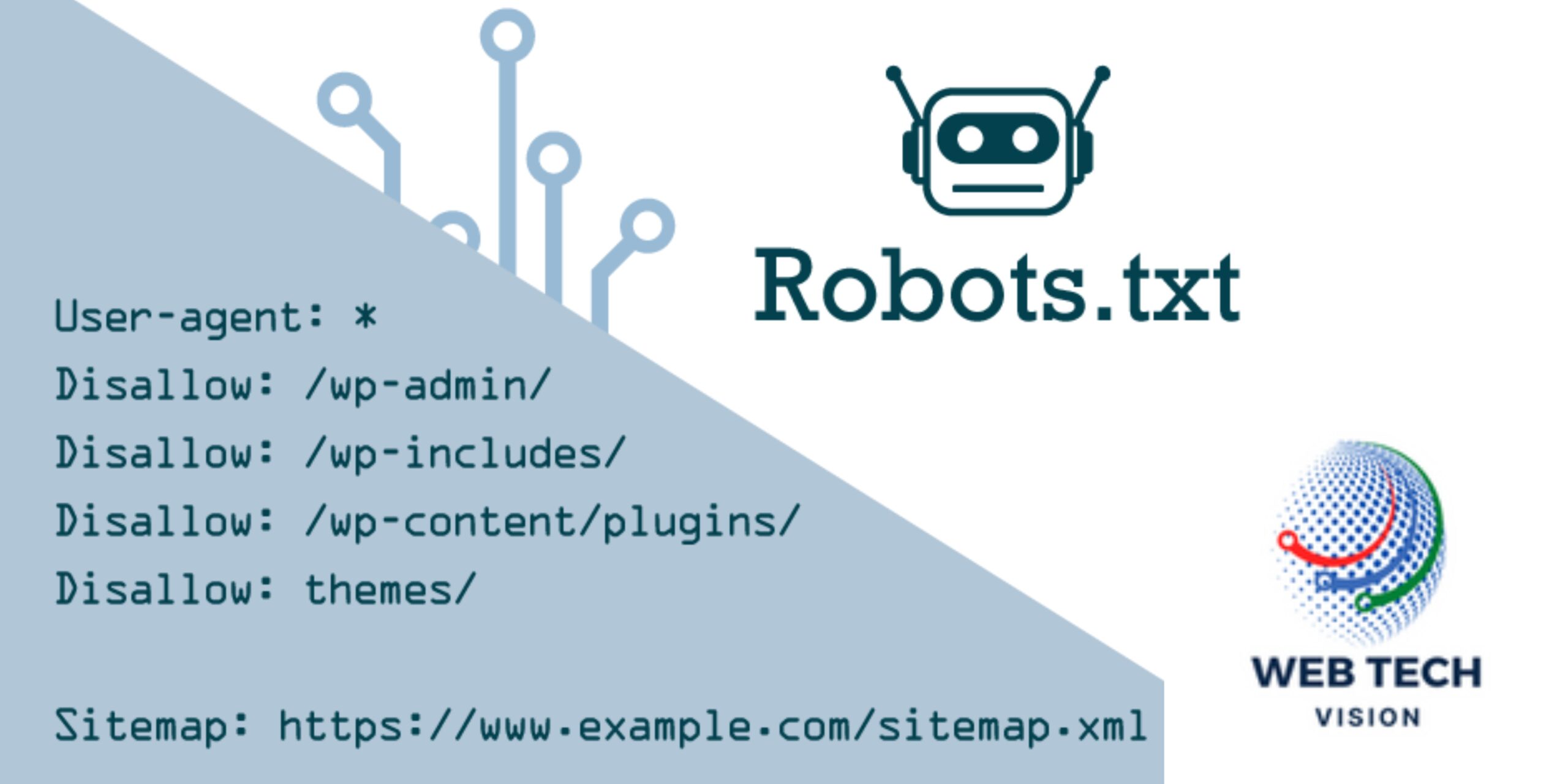

Robots.txt is a plain text file that website owners create to tell web crawlers which parts of the site they may crawl and which parts they should skip. It serves as a virtual “No Entry” sign for search engine bots. Note that it controls crawling, not indexing: a disallowed URL can still show up in search results if other pages link to it. Compliance is also voluntary, so only well-behaved bots follow it.

How Does Robots.txt Work?

When a search engine bot arrives at a website, it first requests the robots.txt file from the root of that host (for example, https://www.example.com/robots.txt). If the file exists, the bot reads its directives to learn which areas of the site it is allowed to crawl. If there is no file, crawlers generally assume the whole site may be crawled.
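To make the lookup concrete, here is a minimal Python sketch, using only the standard library, of how a crawler might work out which robots.txt file governs a given page. The helper name `robots_url` and the example URLs are hypothetical. The point it illustrates is that only the scheme, host, and port of the page are kept, so a page on a subdomain or on a different port is governed by a different file.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Hypothetical helper: return the robots.txt URL that governs page_url.

    Crawlers only look at the root of the same scheme + host (+ port),
    so the page's path, query string and fragment are discarded.
    """
    parts = urlsplit(page_url)
    if not parts.scheme or not parts.netloc:
        raise ValueError(f"expected an absolute URL, got {page_url!r}")
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


if __name__ == "__main__":
    for url in (
        "https://www.example.com/blog/post.html?ref=home",
        "https://shop.example.com/cart",                   # subdomain: separate file
        "http://www.example.com:8080/private/report.pdf",  # other port: separate file
    ):
        print(url, "->", robots_url(url))
```

A crawler built this way would fetch each computed URL once and cache the result before requesting pages from that host.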

Key Components of Robots.txt:

- User-agent: Specifies which web crawler the directives that follow apply to, for example Googlebot, Bingbot, or a specific bot from another search engine. An asterisk (`*`) addresses all crawlers.

```
User-agent: Googlebot
```

- Disallow: Instructs bots not to crawl a specific area of the site. You can add multiple Disallow lines to cover different sections.

```
Disallow: /private/
```

- Allow: Permits bots to crawl a specific area even when a broader Disallow rule covers it. When rules conflict, Google follows the most specific (longest) matching path.

```
Allow: /public/
```

- Sitemap: Tells search engines where your XML sitemap is located, giving them additional guidance for crawling and indexing. Use the full URL.

```
Sitemap: https://www.example.com/sitemap.xml
```
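To see how these directives combine, the sketch below feeds a small, hypothetical robots.txt into Python's standard `urllib.robotparser.RobotFileParser` (Python 3.8 or later for `site_maps()`) and asks which URLs each crawler may fetch. The helper `build_parser`, the paths, and the file contents are illustrative assumptions. One caveat: Python's parser applies the first matching rule in a group, whereas Google applies the most specific one, so the file lists the narrower Allow before the broader Disallow to get the same answer under both.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for www.example.com. The Allow line comes first
# because RobotFileParser uses the first rule that matches the path.
ROBOTS_TXT = """\
User-agent: Googlebot
Allow: /private/press/
Disallow: /private/

User-agent: *
Disallow: /private/
Disallow: /tmp/

Sitemap: https://www.example.com/sitemap.xml
"""


def build_parser(text: str) -> RobotFileParser:
    """Hypothetical helper: parse robots.txt text without any network access."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())  # read() would instead fetch the file over HTTP
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    checks = [
        ("Googlebot", "https://www.example.com/private/press/launch.html"),
        ("Googlebot", "https://www.example.com/private/notes.html"),
        ("Googlebot", "https://www.example.com/tmp/cache.html"),
        ("Bingbot", "https://www.example.com/tmp/cache.html"),
        ("Bingbot", "https://www.example.com/blog/post.html"),
    ]
    for agent, url in checks:
        print(f"{agent:<10} {url} -> {rp.can_fetch(agent, url)}")
    print("Sitemaps:", rp.site_maps())
```

The five checks come out True, False, True, False, True. Googlebot obeys only the group addressed to it, so /tmp/ stays open to it even though the `*` group blocks that path, while Bingbot has no group of its own and falls back to `*`.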

Best Practices for Robots.txt:

- Ensure Correct Placement: The robots.txt file must sit in the root directory of your host (for example, https://www.example.com/robots.txt). Crawlers do not look for it in subdirectories, and each subdomain needs its own file.

- Use Disallow Sparingly: Robots.txt gives you control over crawling, but overly broad or excessive Disallow rules can unintentionally stop search engines from crawling important content.

- Include Sitemap Information: If available, include a Sitemap directive pointing to your XML sitemap. This assists search engines in understanding the structure of your site.

- Regularly Update: As your site evolves, update the robots.txt file to reflect changes. Regularly check for errors and ensure directives accurately represent your site’s structure.
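Part of that checking can be automated. The following is a rough sketch, not a complete validator, of a hypothetical `audit_robots` helper: it flags a few common mistakes (rules placed before any User-agent line, unrecognized directive names, relative Sitemap URLs) and then uses the standard `urllib.robotparser` module to confirm that pages you consider important are still crawlable. The set of known directives, the sample file, and the URL list are assumptions to adapt to your own site.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser

# Directive names this sketch accepts (an assumption; Crawl-delay is honored
# by some crawlers but ignored by Google). Anything else is reported.
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def audit_robots(text, must_crawl, agent="Googlebot"):
    """Hypothetical helper: return a list of warnings for a robots.txt body."""
    warnings = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        if ":" not in line:
            warnings.append(f"line {number}: missing ':' in {raw!r}")
            continue
        name, value = line.split(":", 1)
        name, value = name.strip().lower(), value.strip()
        if name not in KNOWN_DIRECTIVES:
            warnings.append(f"line {number}: unknown directive {name!r}")
        elif name == "user-agent":
            seen_user_agent = True
        elif name in ("allow", "disallow") and not seen_user_agent:
            # A rule outside any group is not valid; RobotFileParser skips it.
            warnings.append(f"line {number}: {name} rule appears before any User-agent line")
        elif name == "sitemap" and not (urlsplit(value).scheme and urlsplit(value).netloc):
            warnings.append(f"line {number}: sitemap URL should be absolute: {value!r}")

    # Second pass: let the standard-library parser decide whether key pages are blocked.
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    for url in must_crawl:
        if not parser.can_fetch(agent, url):
            warnings.append(f"{agent} is blocked from important URL {url}")
    return warnings


# Hypothetical file containing several deliberate mistakes.
SAMPLE = """\
Disallow: /tmp/
User-agent: *
Disallow: /blog/
Noindex: /drafts/
Sitemap: /sitemap.xml
"""

if __name__ == "__main__":
    important = ["https://www.example.com/", "https://www.example.com/blog/post.html"]
    for warning in audit_robots(SAMPLE, important):
        print(warning)
```

On the sample file this reports four problems: the stray Disallow on line 1, the unrecognized Noindex directive, the relative sitemap path, and the blocked blog URL. Running a check like this whenever the file changes can catch an overly broad Disallow before it goes live.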

Conclusion: Navigating SEO Waters with Robots.txt

In the intricate dance between website owners and search engines, robots.txt is a tool for controlled exploration. By carefully crafting its directives, you guide web crawlers through your website so they spend their time on essential content rather than on areas you have chosen to exclude. Keep in mind that the file is public and only advisory, so it is not a way to protect private data. As you embark on your SEO journey, let robots.txt be the gatekeeper that keeps the relationship between your website and ever-curious search engine bots a harmonious one.